Table of contents

- Governance attacks in practice

- The fundamental challenge: Indistinguishability

- A framework for assessing and addressing vulnerability

Many web3 projects embrace permissionless voting using a fungible and tradable native token. Permissionless voting can offer many benefits, from lowering barriers to entry to increasing competition. Token holders can use their tokens to vote on a range of issues — from simple parameter adjustments to the overhaul of the governance process itself. (For a review of DAO governance, see “Lightspeed Democracy.”) But permissionless voting is vulnerable to governance attacks, in which an attacker acquires voting power through legitimate means (e.g., buying tokens on the open market) but uses that voting power to manipulate the protocol for the attacker’s own benefit. These attacks are purely “in-protocol,” which means they can’t be addressed through cryptography. Instead, preventing them requires thoughtful mechanism design. To that end, we’ve developed a framework to help DAOs assess the threat and potentially counter such attacks.

The problem of governance attacks isn’t just theoretical. Such attacks have already happened in the real world, and they will continue to.

In one prominent example, Steemit, a startup building a decentralized social network on its blockchain, Steem, had an on-chain governance system controlled by 20 witnesses. Voters used their STEEM tokens (the platform’s native currency) to choose the witnesses. While Steemit and Steem were gaining traction, Justin Sun had developed plans to merge Steem into Tron, a blockchain protocol he had founded in 2018. To acquire the voting power to do so, Sun approached one of the founders of Steem and bought tokens equivalent to 30 percent of the total supply. Once the then-current Steem witnesses discovered his purchase, they froze Sun’s tokens. What followed was a public back-and-forth between Sun and Steem to control enough tokens to install their preferred slate of top 20 witnesses. After involving major exchanges and spending hundreds of thousands of dollars on tokens, Sun was eventually victorious and effectively had free rein over the network.

In another instance, Beanstalk, a stablecoin protocol, found itself susceptible to a governance attack via flash loan. An attacker took out a loan to acquire enough of Beanstalk’s governance token to instantly pass a malicious proposal that allowed them to seize $182 million of Beanstalk’s reserves. Unlike the Steem attack, this one happened within the span of a single block, which meant it was over before anyone had time to react.

While these two attacks happened in the open and under the public eye, governance attacks can also be conducted surreptitiously over a long period of time. An attacker might create numerous anonymous accounts and slowly accumulate governance tokens, while behaving just like any other holder to avoid suspicion. In fact, given how low voter participation tends to be in many DAOs, those accounts could lie dormant for an extended period of time without raising suspicion. From the DAO’s perspective, the attacker’s anonymous accounts could contribute to the appearance of a healthy level of decentralized voting power. But eventually the attacker could reach a threshold where these sybil wallets have the power to unilaterally control governance without the community being able to respond. Similarly, malicious actors might acquire enough voting power to control governance when turnout is sufficiently low, and then try to pass malicious proposals when many other token holders are inactive.
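To make the low-turnout risk concrete, here is a back-of-the-envelope sketch. It assumes a simple-majority, one-token-one-vote rule with no quorum, and that every non-attacker token that turns out votes against the attacker. The function name and the turnout figures are hypothetical and not drawn from any particular DAO.

```python
def min_attack_share(honest_turnout: float) -> float:
    """Share of total supply an attacker must exceed to outvote honest voters.

    Assumes simple majority, no quorum, and that every honest token that
    turns out votes against the attacker.
    honest_turnout: fraction of non-attacker tokens that actually vote (0..1).
    """
    if not 0.0 <= honest_turnout <= 1.0:
        raise ValueError("honest_turnout must be between 0 and 1")
    # Attacker holds share a; honest opposition is honest_turnout * (1 - a).
    # The attack succeeds when a > honest_turnout * (1 - a),
    # which rearranges to a > honest_turnout / (1 + honest_turnout).
    return honest_turnout / (1.0 + honest_turnout)


for turnout in (1.0, 0.5, 0.1, 0.05):
    print(f"honest turnout {turnout:.0%}: attacker needs more than "
          f"{min_attack_share(turnout):.1%} of supply")
```

Under these assumptions, an attacker facing 10 percent honest turnout needs only about 9 percent of the supply, far below the 50 percent that full participation would require.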

And while we might think all governance actions are just the result of market forces at work, in practice governance can sometimes produce inefficient outcomes as the result of incentive failures or other vulnerabilities in a protocol’s design. Just as government policymaking can become captured by interest groups or even simple inertia, DAO governance can lead to inferior outcomes if it’s not structured properly.

So how can we address such attacks through mechanism design?

Market mechanisms for token allocation fail to distinguish between users who want to make valuable contributions to a project and attackers who attach high value to disrupting or otherwise controlling it. In a world where tokens can be bought or sold in a public marketplace, both of these groups are, from the market perspective, behaviorally indistinguishable: both are willing to buy large quantities of tokens at increasingly high prices.

This indistinguishability problem means that decentralized governance doesn’t come for free. Instead, protocol designers face fundamental tradeoffs between openly decentralizing governance and securing their systems against attackers seeking to exploit governance mechanisms. The more community members are free to gain governance power and influence the protocol, the easier it is for attackers to use that same mechanism to make malicious changes.

This indistinguishability problem is familiar from the design of Proof of Stake blockchain networks. There as well, a highly liquid market in the token makes it easier for attackers to acquire enough stake to compromise the network’s security guarantees. Nevertheless, a mixture of token incentive and liquidity design makes Proof of Stake networks possible. Similar strategies can help secure DAO protocols.

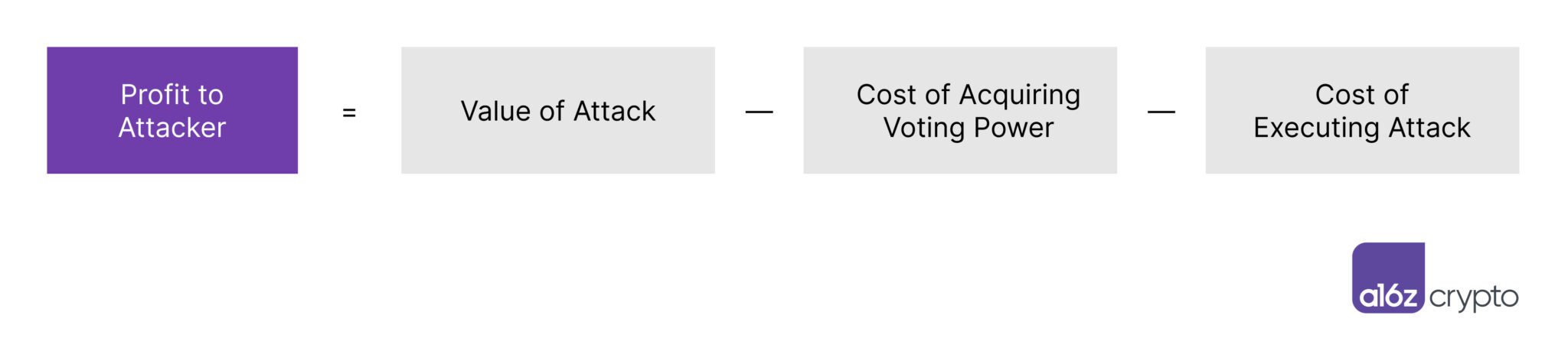

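To analyze the vulnerability different projects face, we use a framework captured by the following equation:

$$
\text{attacker's profit} = \text{value of attack} - \text{cost of acquiring voting power} - \text{cost of executing attack}
$$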

For a protocol to be considered secure against governance attacks, an attacker’s profit should be negative. When designing the governance rules for a project, this equation can be used as a guidepost for evaluating the impact of different design choices. To reduce the incentives to exploit the protocol, the equation implies three clear choices: decrease the value of attacks, increase the cost of acquiring voting power, and increase the cost of executing attacks.
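As a rough illustration of how the three terms interact, the sketch below computes an attacker's profit from estimated inputs and checks whether it is negative. The class name, field names, and dollar figures are all hypothetical; in practice each term is hard to estimate and would carry wide error bars.

```python
from dataclasses import dataclass


@dataclass
class AttackEstimate:
    """Hypothetical estimates, all in the same currency unit."""
    value_of_attack: float        # what the attacker could extract or gain
    cost_of_voting_power: float   # cost to buy or borrow enough tokens
    cost_of_execution: float      # delays, fees, risk of being blocked

    def profit(self) -> float:
        return (self.value_of_attack
                - self.cost_of_voting_power
                - self.cost_of_execution)

    def is_secure(self) -> bool:
        # The framework's condition: the attacker's profit should be negative.
        return self.profit() < 0


baseline = AttackEstimate(value_of_attack=10_000_000,
                          cost_of_voting_power=6_000_000,
                          cost_of_execution=1_000_000)
# Same costs, but governance scope has been narrowed so less can be extracted.
narrower_scope = AttackEstimate(value_of_attack=4_000_000,
                                cost_of_voting_power=6_000_000,
                                cost_of_execution=1_000_000)

print(baseline.profit(), baseline.is_secure())
print(narrower_scope.profit(), narrower_scope.is_secure())
```

In this made-up example, lowering the value of the attack is enough to flip the sign of the profit; raising either cost term would have the same effect.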

Limiting the value of an attack can be difficult because the more successful a project gets, the more valuable a successful attack may become. Clearly a project should not intentionally sabotage its own success just to decrease the value of an attack.

Nevertheless, designers can limit the value of attacks by limiting the scope of what governance can do. If governance only includes the power to change certain parameters in a project (e.g., interest rates on a lending protocol), then the scope of potential attacks is much narrower than when governance allows fully general control of the governing smart contract.
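One way to picture scope-limited governance is a whitelist of parameters, each with a bounded range, so that a passed proposal can never touch anything else. The sketch below is a simplified off-chain model, not any protocol's actual contract; the parameter names and bounds are assumptions chosen for illustration.

```python
# Hypothetical whitelist: parameter name -> (min, max) that governance may set.
GOVERNABLE_PARAMS = {
    "interest_rate_bps": (0, 2_000),
    "collateral_factor_bps": (5_000, 9_000),
}


class OutOfScopeError(Exception):
    """Raised when a proposal tries to do something governance cannot do."""


def apply_proposal(state: dict, changes: dict) -> dict:
    """Return a new state with `changes` applied.

    Raises OutOfScopeError if any change touches a parameter that is not
    whitelisted or sets a value outside its allowed range.
    """
    new_state = dict(state)
    for name, value in changes.items():
        if name not in GOVERNABLE_PARAMS:
            raise OutOfScopeError(f"{name!r} is not governable")
        low, high = GOVERNABLE_PARAMS[name]
        if not low <= value <= high:
            raise OutOfScopeError(f"{name!r}={value} is outside [{low}, {high}]")
        new_state[name] = value
    return new_state


state = {"interest_rate_bps": 300, "collateral_factor_bps": 7_500}
print(apply_proposal(state, {"interest_rate_bps": 500}))
```

With this design, even an attacker who wins a vote can only move a whitelisted parameter within its bounds, which caps the value term in the framework.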

Governance scope can be a function of a project’s stage. Early in its life, a project might have more expansive governance as it finds its footing, but in practice governance may be tightly controlled by the founding team and community. As the project matures and decentralizes control, it may make sense to introduce some degree of friction in governance – at minimum, requiring large quorums for the most significant decisions.
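The idea of requiring larger quorums for more significant decisions can be sketched as a tiered rule table. The tiers, thresholds, and function name below are hypothetical; a real project would choose its own categories and numbers.

```python
# Hypothetical tiers. "quorum" is the fraction of total supply that must vote;
# "approval" is the fraction of votes cast that must be exceeded by votes in favor.
RULES = {
    "parameter_change": {"quorum": 0.04, "approval": 0.50},
    "treasury_spend":   {"quorum": 0.10, "approval": 0.60},
    "contract_upgrade": {"quorum": 0.20, "approval": 0.67},
}


def passes(kind: str, votes_for: float, votes_against: float,
           total_supply: float) -> bool:
    """Check a tally against the quorum and approval rule for its tier."""
    rule = RULES[kind]
    cast = votes_for + votes_against
    if cast == 0:
        return False
    if cast / total_supply < rule["quorum"]:
        return False  # not enough participation for a decision of this weight
    return votes_for / cast > rule["approval"]


# The same tally clears the low tier but fails the high one.
print(passes("parameter_change", 5, 1, 100))
print(passes("contract_upgrade", 5, 1, 100))
```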

A project can also take steps to make it harder to acquire the voting power needed for an attack. The more liquid the token, the easier it is to acquire that voting power, so, almost paradoxically, projects might want to reduce liquidity for the sake of protecting governance. One could try to reduce the short-run tradability of tokens directly, but that might be technically infeasible.

To reduce liquidity indirectly, projects can provide incentives that make individual token holders less willing to sell. This can be done by incentivizing staking, or by giving tokens standalone value beyond pure governance. The more value accrues to token holders, the more aligned they become with the success of the project.

Standalone token benefits might include access to in-person events or social experiences. Crucially, benefits like these are high-value to individuals aligned with the project but are useless for an attacker. Providing these sorts of benefits raises the effective price an attacker faces when acquiring tokens: current holders will be less willing to sell because of the standalone benefits, which should increase the market price; yet while the attacker must pay the higher price, the presence of the standalone features does not raise the attacker’s value from acquiring the token.
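A toy calculation can illustrate the price effect. It assumes each holder owns one token and sells only at or above a personal reservation price, that the attacker buys from the cheapest sellers first, and that a standalone benefit adds a fixed amount to every holder's reservation price. All numbers and names are hypothetical.

```python
def cost_to_acquire(reservation_prices: list, tokens_needed: int) -> float:
    """Total paid when buying one token from each of the cheapest sellers."""
    if tokens_needed > len(reservation_prices):
        raise ValueError("not enough tokens available to buy")
    return sum(sorted(reservation_prices)[:tokens_needed])


# Hypothetical reservation prices based on governance value alone.
base_values = [1.0, 1.2, 1.5, 2.0, 3.0]
# Hypothetical per-token value of standalone benefits to aligned holders.
standalone_benefit = 0.5

cost_without = cost_to_acquire(base_values, 3)
cost_with = cost_to_acquire([v + standalone_benefit for v in base_values], 3)

print(f"cost without standalone benefits: {cost_without:.2f}")
print(f"cost with standalone benefits:    {cost_with:.2f}")
```

The attacker's cost rises by the benefit on every token bought, while the value the attacker gets from the attack is unchanged, since the benefits are worthless to them.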

In addition to raising the cost of voting power, it’s possible to introduce frictions that make it harder for an attacker to exercise voting power even once they have acquired tokens. For example, designers could require some sort of user authentication for participating in votes, such as a KYC (know your customer) check or reputation score threshold. One could even limit the ability of an unauthenticated actor to acquire voting tokens in the first place, perhaps requiring some set of existing validators to attest to the legitimacy of new parties.

In some sense, this is exactly the way many projects distribute their initial tokens, making sure trusted parties control a significant fraction of the voting power. (Many Proof of Stake solutions use similar techniques to defend their security — tightly controlling who has access to early stake, and then progressively decentralizing from there.)

Alternatively, projects can make it so that even if an attacker controls a substantial amount of voting power, they still face difficulties in passing malicious proposals. For instance, some projects have time locks so that a token can’t be used to vote for some period of time after it has been exchanged. Thus an attacker that seeks to buy or borrow a large amount of tokens would face additional costs from waiting before they can actually vote, as well as the risk that voting members would notice and thwart their prospective attack in the interim. Delegation can also be helpful here. By giving active but non-malicious participants the right to vote on their behalf, individuals who do not want to take a particularly active role in governance can still contribute their voting power toward protecting the system.
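A voting time lock can be sketched as a ledger in which tokens only count as votes once they have been held for a minimum number of blocks. This is a toy model under stated assumptions: the class and method names are hypothetical, it tracks only incoming tokens, and it ignores transfers out and delegation.

```python
class TimeLockedToken:
    """Toy ledger where tokens count as votes only after a holding period."""

    def __init__(self, lock_blocks: int):
        self.lock_blocks = lock_blocks
        self.lots = {}  # holder -> list of (amount, block_acquired)

    def credit(self, holder: str, amount: int, block: int) -> None:
        """Record that `holder` received `amount` tokens at `block`."""
        self.lots.setdefault(holder, []).append((amount, block))

    def voting_power(self, holder: str, current_block: int) -> int:
        """Sum only the lots that have been held for at least lock_blocks."""
        return sum(amount
                   for amount, acquired in self.lots.get(holder, [])
                   if current_block - acquired >= self.lock_blocks)


token = TimeLockedToken(lock_blocks=100)
token.credit("long_term_holder", 50, block=0)
token.credit("attacker", 500, block=1_000)  # e.g. bought or borrowed just now

# In the same block as the purchase, the attacker has no votes yet.
print(token.voting_power("attacker", current_block=1_000))
print(token.voting_power("long_term_holder", current_block=1_000))
# Only after the lock period do the new tokens count.
print(token.voting_power("attacker", current_block=1_100))
```

Under a rule like this, a loan that must be repaid within a single block never acquires voting power, and a slower buyer has to hold a visible position throughout the lock period.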

Some projects use veto powers that allow a vote to be delayed for some period of time to alert inactive voters about a potentially dangerous proposal. Under such a scheme, even if an attacker makes a malicious proposal, voters have the ability to respond and shut it down. The idea behind these and similar designs is to stop an attacker from sneaking a malicious proposal through and to provide a project’s community time to formulate a response. Ideally, proposals that clearly align with the good of the protocol will not have to face these roadblocks.

At Nouns DAO, for example, veto power is held by the Nouns Foundation until the DAO itself is ready to implement an alternative schema. As they wrote on their website, “The Nouns Foundation will veto proposals that introduce non-trivial legal or existential risks to the Nouns DAO or the Nouns Foundation.”
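The general pattern of a delay plus a veto window can be sketched as a small state machine: a passed proposal is queued, can be vetoed until the delay expires, and can be executed only afterward. This is a generic illustration with hypothetical names, not the Nouns DAO implementation, and it deliberately leaves out who is authorized to veto.

```python
from enum import Enum


class Status(Enum):
    QUEUED = "queued"
    VETOED = "vetoed"
    EXECUTED = "executed"


class DelayedProposal:
    """A passed proposal that must wait out a delay before it can execute."""

    def __init__(self, passed_at_block: int, delay_blocks: int):
        self.executable_at = passed_at_block + delay_blocks
        self.status = Status.QUEUED

    def veto(self, current_block: int) -> bool:
        """Cancel the proposal if it is still inside the delay window."""
        if self.status is Status.QUEUED and current_block < self.executable_at:
            self.status = Status.VETOED
            return True
        return False

    def execute(self, current_block: int) -> bool:
        """Execute only if the delay has elapsed and nobody vetoed."""
        if self.status is Status.QUEUED and current_block >= self.executable_at:
            self.status = Status.EXECUTED
            return True
        return False


malicious = DelayedProposal(passed_at_block=1_000, delay_blocks=200)
print(malicious.execute(current_block=1_000))  # too early to execute
print(malicious.veto(current_block=1_050))     # community reacts in time
print(malicious.execute(current_block=1_200))  # vetoed proposals never run
print(malicious.status)
```

The delay is what gives inactive voters or a designated veto holder time to notice; its length trades off responsiveness for benign proposals against reaction time for harmful ones.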

* * *

Projects must strike a balance: allowing a certain level of openness to community changes (which may be unpopular at times) while not letting malicious proposals slip through the cracks. It often takes just one malicious proposal to bring down a protocol, so having a clear understanding of the risk tradeoff of accepting versus rejecting proposals is crucial. And of course a high-level tradeoff exists between ensuring governance security and making governance possible: any mechanism that introduces friction to block a potential attacker also makes the governance process more challenging to use.

The solutions we have sketched here fall on a spectrum between fully decentralized governance and partially sacrificing some ideals of decentralization for the overall health of the protocol. Our framework highlights different paths projects can choose as they seek to make sure governance attacks will not be profitable. We hope the community will use the framework to further develop these mechanisms through their own experimentation to make DAOs even more secure in the future.

***

Pranav Garimidi is a rising junior at Columbia University and a Summer Research Intern at a16z crypto.

Scott Duke Kominers is a Professor of Business Administration at Harvard Business School, a Faculty Affiliate of the Harvard Department of Economics, and a Research Partner at a16z crypto.

Tim Roughgarden is a Professor of Computer Science and a member of the Data Science Institute at Columbia University, and Head of Research at a16z crypto.

***

Acknowledgments: We appreciate helpful comments and suggestions from Andy Hall. Special thanks also to our editor, Tim Sullivan.

***

Disclosures: Kominers holds a number of crypto tokens and is a part of many NFT communities; he advises various marketplace businesses, startups, and crypto projects; and he also serves as an expert on NFT-related matters.
